Misconceptions About AI-Driven Attacks in Infosec

Some voices in the infosec community dismiss the threat of AI-driven attacks as hype pushed by well-funded cybersecurity firms. However, this perspective misses an important point. Consider two key realities:

1) AI can significantly speed up routine technical tasks.

2) Most successful cyber attacks, such as ransomware campaigns, rely on standard methods (and, increasingly, nothing more than built-in system tools) rather than magical, cutting-edge zero-day techniques.

Given this, the potential for AI in cyber attacks is evident. Are we seeing widespread use of AI-driven ransomware operations yet? No, but not because they’re infeasible. The current limitation is that the supporting infrastructure isn’t quite there yet: frameworks for building AI-driven applications, such as LangChain, are still very new to the market and require substantial hand-crafting to produce even simple applications.

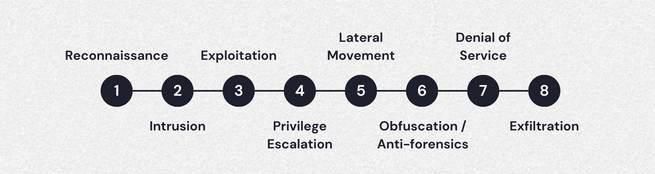

A successful cyber attack takes multiple steps, so an attacker would need to chain the stages of the attack together and build AI logic to handle each one. Developing AI that handles all of these stages comprehensively hasn’t been worth the effort, at least not yet.

However, a huge amount of effort is being invested in AI-enabled applications, including autonomous agents, so frameworks such as these are becoming more user-friendly by the day. This lowers the barrier to entry for adapting them to malicious use cases.

But Won’t Defenders Get the Same Gains?

Because of the cybersecurity asymmetry (defenders need to win every battle, attackers need to win only once), building an offensive AI that succeeds end to end is far simpler than building a defensive AI with the same success rate. Defenders must win every battle, over and over: block every access attempt, detect and mitigate any initial breach, fix open vulnerabilities and weaknesses, and do all of this without breaking production, among other things. A defensive AI system is therefore far more complex than an offensive one.
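A simple probability model makes this asymmetry concrete. As a simplifying assumption of our own, treat an attacker’s $n$ attempts as independent, each getting past the defenses with the same probability $p$; the numbers in the last line are purely illustrative.

```latex
\begin{aligned}
P(\text{defender blocks all } n \text{ attempts}) &= (1 - p)^{n} \\
P(\text{attacker succeeds at least once})        &= 1 - (1 - p)^{n} \\
p = 0.01,\; n = 100:\quad (0.99)^{100} &\approx 0.366
  \;\Rightarrow\; 1 - 0.366 \approx 0.634
\end{aligned}
```

Even a defense that stops 99% of individual attempts leaves the attacker roughly a 63% chance of getting through at least once over 100 tries. Real attempts are neither independent nor equally likely to succeed, so this only illustrates the direction of the asymmetry, not its exact size.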

Cybersecurity also repeatedly sees scenarios in which vast numbers of organizations become vulnerable simultaneously because a critical vulnerability is discovered in commonplace software or hardware.

Consider, then, a scenario like a “Log4j 2.0”, i.e. a new vulnerability that makes about half of all organizations globally exploitable. An AI agent using boilerplate post-access techniques could effortlessly ransom, say, 10-20% of these victims (roughly 5-10% of all organizations), constrained only by the compute speed of the underlying GPUs. The result could genuinely be described as the cyber 9/11 the cybersecurity community has been warning about for ages.

“Thankfully Hackers Are Only Using AI To Develop Phishing Emails For Now”

False. According to GitHub user cybershujin’s “Adversary use of Artificial Intelligence and LLMs” list, phishing has already been overtaken by MITRE ATT&CK technique T1588 Obtain Capabilities: Tool, a high-level technique that, per MITRE, covers capabilities adversaries obtain to “support their operations throughout numerous phases of the adversary lifecycle”.

The full list of observed techniques and their occurrences:

| Technique ID | Technique Name | Occurrences |
|---|---|---|
| T1588 | Obtain Capabilities: Tool | 7 |
| T1566 | Phishing | 6 |
| T1587 | Develop Capabilities: Malware | 4 |
| T1587 | Develop Capabilities | 3 |
| T1593 | Search Open Websites/Domains | 3 |
| T1588.006 | Obtain Capabilities: Vulnerabilities | 2 |
| T1592 | Gather Victim Org Information | 2 |
| T1059 | Command and Scripting Interpreter | 2 |
| T1583 | Acquire Infrastructure | 2 |
| T1195.001 | Supply Chain Compromise | 2 |
| T1562.001 | Impair Defenses: Disable or Modify Tools | 1 |
| T1564 | Hide Artifacts | 1 |
| T1114 | Email Collection | 1 |
| T1565 | Data Manipulation | 1 |
| T1657 | Financial Theft | 1 |
| T1568 | Resource Development | 1 |
| TA0003 | Persistence | 1 |
| TA0004 | Privilege Escalation | 1 |

Phishing is evolving rapidly alongside other techniques, and the infosec community’s focus on either a single method or on mythical, ultra-advanced hacking techniques misses the point. Current AI doesn’t need to perform high-tech wizardry to be effective. The real threat isn’t a rare, unforeseeable “black swan” event; it’s a very plausible scenario that could unfold within the next couple of years, given the right resources: a grey-white swan with embedded AI.

“Squishy interiors” have long been the Achilles’ heel of organizational cybersecurity, and that weakness is becoming increasingly unsustainable. Strengthening internal defenses is crucial, because we may soon face a new dynamic in which attacks move faster than human defenders can react and respond.

We must adopt an assume breach mentality.